My mother, Dorothy Schroeder, would have turned 92 today. She was an amazing woman—more amazing than the words below can convey.

She was born Dorothy Alice Schneider, to parents Otto and Marcella (née Beckemeier). Her grandparents on both sides were German immigrants who settled in north St. Louis in the late 1800s. Dorothy grew up in what was then a bustling urban neighborhood, within walking distance of Sportsman’s Park and Bethlehem Lutheran Church. The church was the social hub for all the Lutherans in the neighborhood, and ran a parochial school that Dorothy attended through eighth grade. The neighborhood also had a strong contingent of Catholics, but I remember Mom saying that the Catholic and Lutheran kids were discouraged from playing together. She attended a public high school, though, and grew up to believe that people of all religions are fundamentally good.

Otto Schneider, my grandfather, worked his whole career as a bookkeeper for Rice-Stix dry goods company. Marcella, my grandmother, was a homemaker who never learned to drive. Neither of them graduated from high school, though I remember my grandfather talking about “business college,” where he had learned his bookkeeping skills. In any case, Otto seems to have managed to support his family through the Great Depression with little hardship.

Mom had two brothers: Donald, two years older, who attended the state engineering college in Rolla, served in the Korean War, and became a chemical engineer; and Norman, two years younger, who became a Lutheran minister. But when Dorothy graduated from high school with high grades in 1948, it was apparently understood that she, unlike her brothers, would not be attending college. Perhaps that was just the norm among the families in her social class, or perhaps the colleges were so flooded with young men on the GI Bill that it was difficult for women to be admitted.

So she took a job as a secretary at Concordia Publishing House, the big Lutheran publishing company across town. There she met my father, Vernon Schroeder, an ordained minister who hadn’t lasted long as pastor to a congregation but had gotten a job answering mail for a popular radio preacher. Dorothy and Vernon were married at Bethlehem Church in September 1949, when she was 19 and he was about to turn 32. She changed the middle three letters of her last name.

The Schroeder newlyweds then moved to Chicago, where my father pursued his dream of running a “tract mission,” collecting and distributing religious pamphlets among multiple Protestant denominations. He never made any real money at it, so it fell upon my mother to be the main breadwinner. She was smart, hard-working, and just plain capable. Her longest job during those years was running a Christian bookstore.

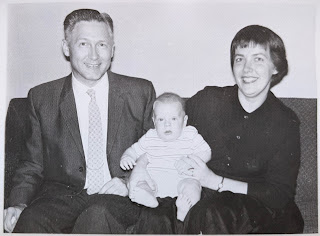

After seven years in Chicago, the Schroeders gave up on the tract mission and moved back to St. Louis. Dad got a job as a proofreader at Concordia Publishing House, while Mom found secretarial work until, rather unexpectedly, I came along in 1962. My brother Paul followed in 1964, and Mom stayed home, now in the suburb of Webster Groves, raising the two of us until we were both in school.

I don’t remember those years well at all, but I think they were happy ones for Mom. My parents later told me they had always wanted children and were disappointed when none arrived sooner. Dorothy was a devoted mother who took tremendous interest in everything Paul and I did. She showered us with gifts for our birthdays and Christmas, and encouraged us to learn and grow in countless ways. She filled the house with books that she hoped would interest us, and read aloud to us until we were old enough to read on our own. She had the old upright piano moved from her parents’ house so I could practice on it. She enrolled Paul in sports leagues so he could develop his remarkable athletic talent.

Soon after Paul started first grade, Mom went back to working outside the home. We needed the money, with Dad in a dead-end job that didn’t pay well. But more importantly, Mom needed a new challenge—and a distraction from what had become an unhappy marriage.

Fortunately, we lived a mile and a half from Webster College, a former Catholic women’s school that had gone co-ed and secular during the 1960s. They hired Mom as secretary to the Director of Publications, whose job was to manage the design and printing of everything from event advertisement posters to the college catalog.

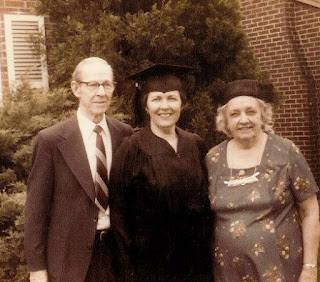

It wasn’t long before Mom was doing essentially all of her boss’s job. Within a few years he was gone and she was running the office—with neither the job title nor the salary that the responsibilities called for. But working at the college did offer one important perk: she could enroll in classes tuition-free and work toward her bachelor’s degree, building on some credits she had already earned at the local community college and through CLEP. She graduated in 1979 with a major in religion. Then, degree in hand, she demanded and got a job title and a raise.

As the college grew to become Webster University, Mom’s office expanded until she was supervising a team of about half a dozen. Her coworkers became some of her best friends, and she also interacted with numerous contractors for design, typesetting, and printing. She loved working with people and was extremely good at it, though she had little tolerance for laziness or incompetence.

Then at age 63, as she was starting to look forward to a well-earned retirement, Mom was diagnosed with late-stage ovarian cancer. That abruptly ended her career at Webster University, where she used up a year of accrued sick leave before transitioning to disability benefits. Surgery and chemotherapy gave her three more years of life, most of which she spent getting the house ready to sell and otherwise making sure things would go as smoothly as possible for the rest of us after she was gone.

As should be apparent by now, Mom’s faith was a huge part of her life. It sustained her through the hard times, and underlay her work ethic and endless goodwill toward the people around her. It also steered her toward the political left during the era of civil rights campaigns and the Vietnam War, as she stuck to her belief in a God of peace who loves all humans equally. Eventually she parted ways with the Missouri Synod Lutherans, whose doctrine had become more narrow and exclusive. Mom just couldn’t conceive of a God who would condemn most of humanity to an eternity of torture merely for their nonbelief. During her last two decades she attended more liberal Protestant churches.

Although Mom’s life was often stressful and much too short, she had a positive outlook and never spoke of any regrets. Her style was to throw herself into whatever work was before her, taking pride in that work and finding joy in the process. She was always conscious of how being a woman had put her at a disadvantage in her education and career, and she would stand up to that kind of injustice when she saw an opportunity to make a difference. But she was never bitter about the lot she had drawn, even if she had every right to be.

Naturally I wonder how her life might have played out if she’d had more opportunities. What if as a young woman she had gone to college and been able to plan her own career, rather than devoting herself to supporting her husband’s dreams? Or what if she’d had a decade or two of active retirement, to take on an ambitious volunteer project or develop her ability as a writer? She had the intelligence, skills, and work ethic to accomplish the sorts of things that our society finds memorable. Yet she wasn’t ambitious on her own personal behalf, and her interests tended to be more broad than deep, so perhaps she would have kept a low profile and simply found more ways to support the people around her.